TheMoon Botnet Resurfaces, Exploiting EoL Devices to Power Criminal Proxy

A botnet previously thought to be inert has been observed enslaving end-of-life (EoL) small office/home office (SOHO) routers and IoT devices to fuel a criminal proxy service called Faceless.

“TheMoon, which emerged in 2014, has been operating quietly while growing to over 40,000 bots from 88 countries in January and February of 2024,” the Black Lotus Labs team at Lumen Technologies said.

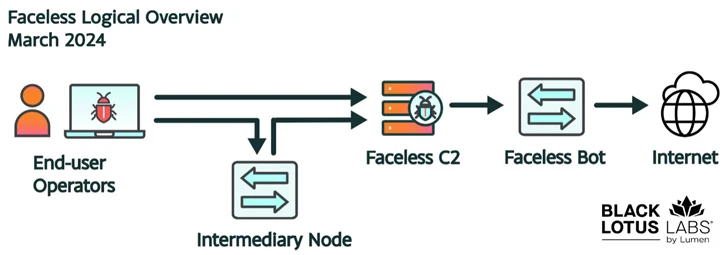

Faceless, detailed by security journalist Brian Krebs in April 2023, is a malicious residential proxy service that offers anonymity to other threat actors for less than a dollar per day.

In doing so, it allows customers to route their malicious traffic through tens of thousands of compromised systems advertised on the service, effectively concealing the traffic’s true origin.

Infrastructure backed by Faceless is assessed to be used by operators of malware such as SolarMarker and IcedID, who route connections to their command-and-control (C2) servers through it to obfuscate their IP addresses.

That being said, a majority of the bots are used for password spraying and/or data exfiltration, primarily targeting the financial sector, with more than 80% of the infected hosts located in the U.S.

Lumen said it first observed the malicious activity in late 2023. The goal is to breach EoL SOHO routers and IoT devices, deploy an updated version of TheMoon, and ultimately enroll the botnet into Faceless.

The attacks entail dropping a loader that’s responsible for fetching an ELF executable from a C2 server. The infection chain also includes a worm module that spreads to other vulnerable servers and another file called “.sox” that’s used to proxy traffic from the bot to the internet on behalf of a user.

In addition, the malware configures iptables rules to drop incoming TCP traffic on ports 8080 and 80 while allowing traffic from three different IP ranges. It also attempts to contact an NTP server from a list of legitimate NTP servers, likely to determine whether the infected device has internet connectivity and is not being run in a sandbox.
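For defenders, the firewall behavior described above can be turned into a rough triage check. The following is a minimal Python sketch, not a vetted detection: it assumes the ruleset has been exported as text in `iptables -S` format, and the function names, the matching threshold, and the sample rules are all hypothetical. The three source ranges in the sample are reserved documentation networks used as placeholders, since the actual ranges are not listed here.

```python
import shlex

# Ports the report says the malware blocks for inbound TCP.
WATCHED_PORTS = {"80", "8080"}

# Placeholder ruleset in `iptables -S` format. The source ranges are
# documentation networks (RFC 5737), NOT the ranges used by the malware.
SAMPLE_RULES = """\
-P INPUT ACCEPT
-A INPUT -s 192.0.2.0/24 -j ACCEPT
-A INPUT -s 198.51.100.0/24 -j ACCEPT
-A INPUT -s 203.0.113.0/24 -j ACCEPT
-A INPUT -p tcp -m tcp --dport 8080 -j DROP
-A INPUT -p tcp -m tcp --dport 80 -j DROP
"""


def parse_rule(line):
    """Parse one '-A CHAIN ...' line into a dict; return None for other lines."""
    try:
        tokens = shlex.split(line)
    except ValueError:
        return None  # unbalanced quotes, skip the line
    if len(tokens) < 2 or tokens[0] != "-A":
        return None
    rule = {"chain": tokens[1], "proto": None, "source": None,
            "dports": set(), "target": None}
    i = 2
    while i < len(tokens):
        tok = tokens[i]
        nxt = tokens[i + 1] if i + 1 < len(tokens) else None
        if tok in ("-p", "--protocol") and nxt:
            rule["proto"] = nxt
            i += 2
        elif tok in ("-s", "--source") and nxt:
            rule["source"] = nxt
            i += 2
        elif tok in ("--dport", "--dports") and nxt:
            rule["dports"].update(nxt.split(","))
            i += 2
        elif tok in ("-j", "--jump") and nxt:
            rule["target"] = nxt
            i += 2
        else:
            i += 1  # option this sketch does not interpret
    return rule


def find_suspicious_pattern(ruleset_text):
    """Flag INPUT rules that drop TCP 80 and 8080 alongside source-based ACCEPTs."""
    dropped_ports, allowed_sources = set(), []
    for line in ruleset_text.splitlines():
        rule = parse_rule(line.strip())
        if rule is None or rule["chain"] != "INPUT":
            continue
        if rule["target"] == "DROP" and rule["proto"] == "tcp":
            dropped_ports |= rule["dports"] & WATCHED_PORTS
        elif rule["target"] == "ACCEPT" and rule["source"]:
            allowed_sources.append(rule["source"])
    return {
        "dropped_ports": sorted(dropped_ports, key=int),
        "allowed_sources": allowed_sources,
        # Assumed threshold: both ports dropped plus at least one allowlisted source.
        "matches": dropped_ports == WATCHED_PORTS and len(allowed_sources) > 0,
    }


if __name__ == "__main__":
    print(find_suspicious_pattern(SAMPLE_RULES))
```

A match is only a prompt for closer inspection. Legitimate hardening can produce the same rule shape, so the result should be weighed together with other indicators before a device is treated as compromised.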

The targeting of EoL appliances to fabricate the botnet is no…