Sinister AI ‘eavesdropping’ trick lets ‘anybody read private chats’ on your Android or iPhone, security experts reveal

CYBERCRIMINALS can spy on users’ conversations with artificial intelligence-powered chatbots, experts have warned.

Ever since ChatGPT launched in November 2022, cybersecurity experts have been concerned about the technology.

ChatGPT is an advanced chatbot that can seamlessly complete tasks like writing essays and generating code in seconds.

Today, several chatbots function like ChatGPT, including Google’s Gemini and Microsoft’s Copilot within Bing.

The chatbots are easy to use, and many users are quickly drawn into conversations with the natural-language companions.

However, experts have expressed concerns over users sharing personal information with AI chatbots.

ChatGPT can collect highly sensitive details users share via prompts and responses.

It can then associate this information with a user’s email address and phone number, and store it.

That’s because to use the platform, users need to provide both an email address and mobile phone number.

Users cannot bypass this by using disposable or masked email addresses and phone numbers.


As a result, ChatGPT is firmly tied to your online identity as it records everything you input.

What’s more, cybercriminals can also obtain this private data if they are determined enough.

“Currently, anybody can read private chats sent from ChatGPT and other services,” Yisroel Mirsky, the head of the Offensive AI Research Lab at Israel’s Ben-Gurion University, told Ars Technica in an email.

“This includes malicious actors on the same Wi-Fi or LAN as a client (e.g., same coffee shop), or even a malicious actor on the internet — anyone who can observe the traffic.”

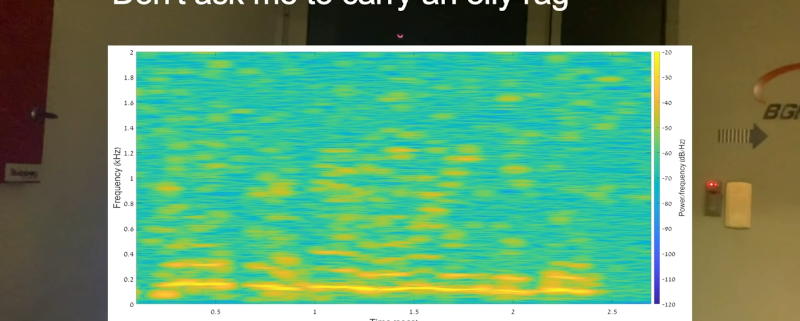

This is known as a “side-channel attack,” and it can be very dangerous for victims.

“The attack is passive and can happen without OpenAI or their client’s knowledge,” Mirsky revealed.

“OpenAI encrypts their traffic to prevent these kinds of eavesdropping attacks, but our research shows that the way OpenAI is using encryption is flawed, and thus the content of the…